Beth Kujan

IEEE Journal of Selected Topics in Quantum Electronics, Vol 28, Issue 6 (2022)

https://ieeexplore.ieee.org/document/9907822

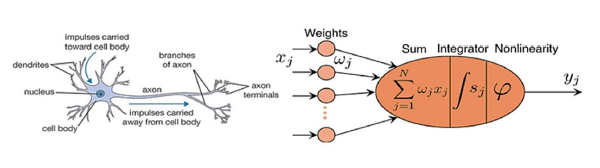

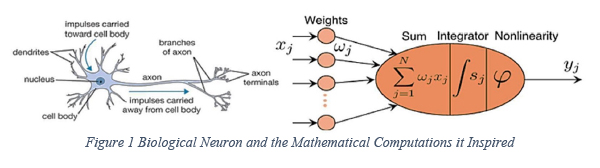

The case for building Scalable Neuromorphic Networks is this: as with humans, smarter chips need larger, more densely connected neural networks. Indeed, neural networks are the current state of the art in machine learning. This isn’t robotics, where a non-sentient arm follows explicit instructions. Instead, machine learning uses algorithms and statistical models to analyze data and then draw inferences from its patterns.

Even a few neurons strung together can do small, impressive things. More is needed, though. So, a team from New Jersey (Princeton U, go Tigers!) and Kingston, Ontario (Queen’s University, go Boo Hoo the Bear!) put together a paper covering what it’s going to take to get real scalability for chip-based decision-making. The answer proposed is a novel wavelength-switched photonic neural network (WS-PNN) system architecture. The first part of the paper takes us through the basics, then describes the benefits of flattened scalability and the flexibility that comes from integrating selected topologies.

Progress in scalability requires photonic integrated circuit hardware for a host of reasons, the foremost being the speed of light. A neuromorphic computer architecture runs calculations in a parallel, distributed manner: each neuron weights its inputs, sums them, and applies a nonlinear operation to the sum before sending the output on to many other neurons. Working together, the network eventually comes up with a best-in-class answer. Just like your neurons did when you were in classes, right? Right.
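To make the weight, sum, and squash cycle concrete, here is a minimal sketch of one artificial neuron. The `tanh` nonlinearity and the weight values are assumptions chosen for illustration; photonic neurons realize the nonlinearity in hardware, and the paper's devices are not modeled here.

```python
import math

def neuron(inputs, weights, bias=0.0):
    """Weight each input, sum the results, then apply a nonlinearity.

    tanh is an illustrative choice (assumption), not the paper's device response."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return math.tanh(total)

# One neuron's output, then fanned out (broadcast) to several downstream neurons.
out = neuron([1.0, 0.5, -0.2], [0.4, -0.3, 0.8])   # weighted sum is 0.09
fan_out = {"neuron_a": out, "neuron_b": out, "neuron_c": out}
```

Every downstream neuron receives the same value; what differs is how each one weights it.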

Silicon-based neurons are grouped into layers, with neurons connected only to neurons in adjacent layers. The benefit of a layered architecture is that each layer can be computed as a single matrix-vector multiplication, a linear-algebra trick that speeds up calculations. There are different layer types and topologies to choose from. Each type of neural network excels at solving a specific domain of problems, and each is tuned with hyperparameters that optimize those solutions. (Diversity is good!)
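Here is the linear-algebra trick in miniature: a minimal sketch in which a whole layer is one matrix-vector product followed by a nonlinearity. The 3-2-1 network shape, the weights, and the `tanh` activation are made up for illustration.

```python
import math

def dense_layer(x, W, b):
    """One layer: y = tanh(W x + b), i.e. a matrix-vector product plus bias."""
    return [math.tanh(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i)
            for row, b_i in zip(W, b)]

# Hypothetical 3-input -> 2-hidden -> 1-output network with made-up weights.
W1 = [[0.2, -0.1, 0.4],
      [0.5, 0.3, -0.2]]
b1 = [0.0, 0.1]
W2 = [[1.0, -1.0]]
b2 = [0.0]

x = [1.0, 0.0, 1.0]
hidden = dense_layer(x, W1, b1)        # hidden neurons see only the input layer
output = dense_layer(hidden, W2, b2)   # the output neuron sees only the hidden layer
```

Because connections only run between adjacent layers, each layer's weights fit neatly into one matrix.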

Speaking of diversity, the paper discusses two types of photonic neurons: a non-spiking type built from a microring modulator and an external light source, and a spiking type that uses excitable lasers. Topology choices include single-group and two-group photonic neurons.
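A non-spiking neuron outputs a continuous level, while a spiking neuron stays quiet until its internal state crosses a threshold and then fires a pulse. Excitable lasers follow their own rate equations, which this sketch does not model. Instead it uses the leaky integrate-and-fire model, a common textbook stand-in for spiking behavior, with made-up threshold, leak, and refractory values.

```python
def lif_spikes(input_current, threshold=1.0, leak=0.9, refractory=2):
    """Leaky integrate-and-fire neuron (a generic spiking abstraction,
    not the paper's excitable-laser model). Returns spike time steps."""
    v = 0.0          # internal state (membrane potential analogue)
    cooldown = 0     # steps remaining in the refractory period
    spikes = []
    for t, i_in in enumerate(input_current):
        if cooldown > 0:          # cannot fire again right after a spike
            cooldown -= 1
            continue
        v = leak * v + i_in       # leak a little, then integrate the input
        if v >= threshold:        # crossing the threshold emits a spike
            spikes.append(t)
            v = 0.0
            cooldown = refractory
    return spikes

strong = lif_spikes([0.4] * 10)   # fires periodically
weak = lif_spikes([0.05] * 50)    # settles below threshold and never fires
```

The strong input produces spikes at steps 2 and 7; the weak one levels off near 0.5 and stays silent.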

The paper shows how neural network topologies can be expanded ahead of the weighting step described earlier, so that the network scales while using a fixed number of wavelengths. The flexibility that comes from mixing different topologies supports a wide range of machine learning applications, such as sophisticated signal processing.
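Why does a fixed number of wavelengths matter? A minimal sketch, assuming that inside one broadcast group every neuron must transmit on its own wavelength, but that the same wavelength set can be reused in every group once switches keep the groups apart. The function `assign_wavelengths` and its slot scheme are hypothetical, not the paper's allocation method.

```python
import math

def assign_wavelengths(num_neurons, num_wavelengths):
    """Assign each neuron a (group, wavelength index) slot.

    Hypothetical model: wavelengths must be distinct within a group,
    but the same set is reused in every switched group."""
    if num_wavelengths < 1:
        raise ValueError("need at least one wavelength")
    # divmod(n, W) -> (group index, wavelength index within that group)
    return [divmod(n, num_wavelengths) for n in range(num_neurons)]

slots = assign_wavelengths(num_neurons=10, num_wavelengths=4)
groups_needed = math.ceil(10 / 4)   # 3 groups holding 4 + 4 + 2 neurons
```

With only four wavelengths, a single group tops out at four neurons; reusing them across groups lifts that ceiling.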

The paper’s focus on machine learning applications leads directly to the use of microring resonators (MRRs) in the chip’s circuit design. Not only are microring resonators used for optical signal processing in a neural network, but they can also provide reconfigurable switching.
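How does a tiny ring of waveguide act as a tunable weight? A minimal sketch, assuming an idealized lossless add-drop microring with a Lorentzian drop-port response and balanced photodetection that subtracts the through port from the drop port. Real rings have loss and thermal crosstalk, and the paper's devices may differ.

```python
def drop_transmission(detuning, fwhm):
    """Idealized Lorentzian drop-port transmission of a lossless add-drop ring.
    detuning (laser minus ring resonance) and fwhm share units, e.g. GHz."""
    return 1.0 / (1.0 + (2.0 * detuning / fwhm) ** 2)

def mrr_weight(detuning, fwhm):
    """Balanced detection: w = T_drop - T_through, which spans [-1, 1]."""
    t_drop = drop_transmission(detuning, fwhm)
    t_through = 1.0 - t_drop   # lossless assumption: light exits one port or the other
    return t_drop - t_through

on_resonance = mrr_weight(0.0, 10.0)     # all light to drop port: weight +1
half_width = mrr_weight(5.0, 10.0)       # split evenly: weight 0
far_off = mrr_weight(1000.0, 10.0)       # nearly all light passes through: close to -1
```

Shifting the ring's resonance (for example, by heating it) slides the weight continuously between +1 and -1, and parking it fully on or off resonance routes a wavelength one way or the other, which is where switching comes in.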

Reconfigurability moves silicon photonics along a path like the one electronics took from application-specific integrated circuits (ASICs) to field-programmable gate arrays (FPGAs). Let’s face it: the advantages of programmability are decisive when systems grow in complexity. Also, specialty chips are expensive and take ages to fabricate. Scalability with a fixed number of wavelengths may be just the ticket.

The paper proposes adopting wavelength-selective switches (WSSs) inside what’s called the broadcasting loop of a wavelength-switched photonic neural network (WS-PNN). The WS-PNN architecture can interconnect many photonic neural networks by linking multiple PNN chips through off-chip WSSs.
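A WSS takes light carrying many wavelengths and sends each wavelength to whichever output port it is configured for. The toy model below is a minimal sketch of that idea; the class `WavelengthSelectiveSwitch`, its methods, and the chip-to-port setup are hypothetical and not a real device API.

```python
from typing import Dict, List

class WavelengthSelectiveSwitch:
    """Toy 1xN WSS: each wavelength channel on the input is routed to one
    configurable output port; unconfigured wavelengths are blocked."""

    def __init__(self, num_ports: int) -> None:
        self.num_ports = num_ports
        self.routing: Dict[int, int] = {}   # wavelength index -> output port

    def configure(self, wavelength: int, port: int) -> None:
        if not 0 <= port < self.num_ports:
            raise ValueError(f"port {port} out of range")
        self.routing[wavelength] = port

    def route(self, signals: Dict[int, float]) -> List[Dict[int, float]]:
        """Split a WDM input (wavelength -> optical power) across output ports."""
        outputs: List[Dict[int, float]] = [{} for _ in range(self.num_ports)]
        for wl, power in signals.items():
            port = self.routing.get(wl)
            if port is not None:
                outputs[port][wl] = power
        return outputs

# Hypothetical setup: neuron outputs from one chip on wavelengths 0-3.
wss = WavelengthSelectiveSwitch(num_ports=2)
wss.configure(0, port=0)   # port 0 might feed chip B
wss.configure(1, port=0)
wss.configure(2, port=1)   # port 1 might feed chip C
routed = wss.route({0: 0.8, 1: 0.1, 2: 0.5, 3: 0.3})   # wavelength 3 is blocked
```

Rewiring the network is just a call to `configure`, not a trip back to the fab.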

The WS-PNN architecture is expected to find new applications in which off-chip WSSs interconnect groups of photonic neurons. According to the paper, interconnected WS-PNNs can achieve unprecedented scalability for photonic neural networks while supporting a versatile mix of feedforward and recurrent neural network topologies.
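What's the practical difference between the two topology types? In a feedforward network a signal passes through once; in a recurrent one, outputs loop back, giving the network a memory. This is a toy, single-neuron sketch with made-up weights. Our assumption, not a detail from the paper, is that in a switched network whether a group's output is routed back to itself or onward decides which behavior you get.

```python
import math

def run(inputs, w_in, w_rec, recurrent=True):
    """Drive one neuron with an input sequence. If recurrent, its previous
    output is fed back with weight w_rec; feedforward drops that term."""
    y = 0.0
    trace = []
    for x in inputs:
        feedback = w_rec * y if recurrent else 0.0
        y = math.tanh(w_in * x + feedback)
        trace.append(y)
    return trace

pulse = [1.0, 0.0, 0.0, 0.0]
ff = run(pulse, w_in=1.0, w_rec=0.9, recurrent=False)   # forgets the pulse at once
rec = run(pulse, w_in=1.0, w_rec=0.9, recurrent=True)   # echo of the pulse lingers
```

Both react identically to the pulse, but only the recurrent version still "remembers" it on later steps.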

~*~*~*~*~

Now that it’s just you and me at the end of this article, I’ll share what I think is the most fun part. It’s the US DOD program that funded it: PEACH = Photonic Edge AI Compact Hardware. Go Princess Peach! A mascot for the ages!